Locked-in syndrome (LIS) is a rare neurological disorder that causes severe paralysis of the voluntary muscles, resulting in near-total loss of motor ability, including speech. LIS is not a language disorder such as aphasia: patients can still formulate language but cannot produce it, because damage to the brain, typically in the brainstem, cuts them off from the muscles they would use to speak. In many cases, the patients are fully conscious and aware of the world around them, but the only physical functionality they have left is the ability to blink or move their eyes in vertical motions – hence, they are ‘locked inside’ their own bodies.

LIS can result from traumatic brain injury, neural damage, or stroke, so it cannot be detected ahead of time and has no direct treatment. While partial recovery of muscle control is possible, physical therapy, nutritional support, and prevention of complications such as respiratory infections are often the only way LIS patients can be helped.

It is estimated that LIS affects about 1% of all people who have suffered a stroke. In the Philippines, strokes are the second leading cause of death, representing close to 14% of all deaths in 2018 alone. For this reason, LIS is a major health risk for the 35% of the total population suffering from some form of cardiovascular disease in the Philippines.

In 2019, a group of Electronics Engineering students at the University of Santo Tomas, led by Jay Patrick M. Nieles and supervised by Engr. Seigfred V. Prado, M.Res. NT, M.Sc. ELEG, proposed an experimental brain-computer typing interface that leverages AI to communicate with patients suffering from LIS and improve their quality of life.

Scanning and coding brain signals

There are many systems that help patients overcome their physical limitations to activate voice boxes, virtual keyboards, and wheelchair-controlling programs. But to work properly, these systems require patients to hold their gaze in a stable and consistent manner.

In the case of LIS patients, eye movements tend to be unstable and inconsistent, which means these systems cannot accurately translate patients’ intentions. Instead of relying on unpredictable physical cues, Jay Patrick M. Nieles’ system is based on reading accurate and reproducible brain signals.

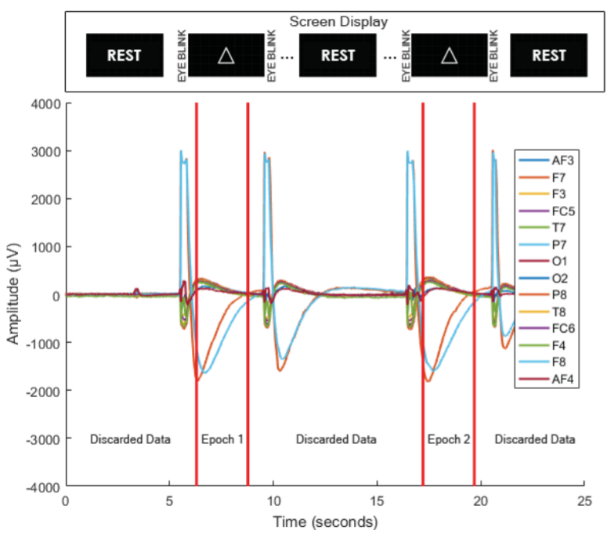

The first step was to get patients to imagine basic geometric shapes and letters. Using Electroencephalogram (EEG) scanners, their brain signals were recorded every time they ‘saw’ shapes and letters in their minds. A machine-learning algorithm was then deployed to identify, classify, and organize the brain signals according to their corresponding shapes or letters.

EEG data is usually decoded in three steps, data pre-processing followed by feature extraction and classification – a long and complicated process that must be examined and overseen by brain imaging experts every step of the way.
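To make the conventional pipeline more concrete, here is a minimal Python sketch of pre-processing, hand-crafted feature extraction, and classification. It is an illustration only, not the team's code: the function names, the log-variance feature, the nearest-centroid classifier, and the synthetic two-channel "trials" are all assumptions chosen to keep the example short.

```python
import math

def preprocess(trial):
    """Step 1 (assumed): remove each channel's mean as a simple baseline correction."""
    cleaned = []
    for channel in trial:
        mean = sum(channel) / len(channel)
        cleaned.append([x - mean for x in channel])
    return cleaned

def extract_features(trial):
    """Step 2 (assumed): summarize each channel by the log of its variance."""
    features = []
    for channel in trial:
        var = sum(x * x for x in channel) / len(channel)
        features.append(math.log(var + 1e-12))  # small offset avoids log(0)
    return features

def fit_centroids(feature_vectors, labels):
    """Step 3a (assumed): average the feature vectors belonging to each class."""
    sums, counts = {}, {}
    for vec, label in zip(feature_vectors, labels):
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, v in enumerate(vec):
            acc[i] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def classify(features, centroids):
    """Step 3b (assumed): choose the class whose centroid is nearest."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))

def toy_trial(amp0, amp1, n=64):
    """Synthetic stand-in for a two-channel EEG recording (not real data)."""
    return [
        [amp0 * math.sin(0.3 * t) + 5.0 for t in range(n)],
        [amp1 * math.sin(0.3 * t) - 2.0 for t in range(n)],
    ]

# Hypothetical training set: two imagined shapes, two trials each.
train = [toy_trial(4.0, 0.5), toy_trial(3.5, 0.6),
         toy_trial(0.5, 4.0), toy_trial(0.6, 3.5)]
labels = ["triangle", "triangle", "circle", "circle"]

train_features = [extract_features(preprocess(t)) for t in train]
centroids = fit_centroids(train_features, labels)

# Classify a new, unseen toy trial.
prediction = classify(extract_features(preprocess(toy_trial(3.8, 0.4))), centroids)
print(prediction)
```

In a pipeline like this, every stage is a separate design decision, which is part of why expert oversight is usually needed at each step.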

In this case, the deep learning model allows the EEG signals to be decoded from the raw data in a completely autonomous manner. In doing so, the AI system not only allows the second and third steps of the traditional process to be combined – streamlining steps and reducing reliance on expert knowledge – but also helps achieve more accurate results.

In another study, Nieles and team demonstrated that the decoding of EEG signals using deep learning yielded accuracy and precision rates of 89.44% and 90.51% respectively, compared to the traditional process, which achieved lower rates of 83.06% and 83.73%.
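For readers unfamiliar with these two metrics, the short Python sketch below shows how accuracy and precision can be computed from a classifier's predictions. The labels are made up for illustration, and averaging precision equally across classes (macro-averaging) is an assumption; the article does not say how the study aggregated its figures.

```python
def accuracy(y_true, y_pred):
    """Share of all predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def macro_precision(y_true, y_pred):
    """Per-class precision, averaged equally over classes (an assumed convention)."""
    classes = sorted(set(y_true) | set(y_pred))
    per_class = []
    for c in classes:
        # True labels of every sample the classifier assigned to class c.
        predicted_c = [t for t, p in zip(y_true, y_pred) if p == c]
        if predicted_c:
            per_class.append(sum(t == c for t in predicted_c) / len(predicted_c))
        else:
            per_class.append(0.0)  # class never predicted
    return sum(per_class) / len(per_class)

# Made-up example: imagined letters versus what a classifier decoded.
y_true = ["A", "A", "A", "B", "B", "C", "C", "C"]
y_pred = ["A", "A", "B", "B", "B", "C", "C", "A"]

print(f"accuracy:  {accuracy(y_true, y_pred):.2%}")
print(f"precision: {macro_precision(y_true, y_pred):.2%}")
```

Accuracy asks how often the decoder is right overall, while precision asks how trustworthy each individual decoded letter or shape is, which matters when a wrong letter changes the meaning of a message.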

Transforming thoughts into actions

The second step in this process is the transformation of processed brain signals into words and commands. The EEG recordings are collected and organized into a personalized ‘glossary’ of brain waves that translates a specific signal into a specific shape, letter, or command – effectively allowing patients to communicate a word or idea just by thinking about it.

If, for example, the brain signal for a triangle shape is associated with the word ‘thirsty’, then an LIS patient can quickly and clearly communicate a need by thinking of a triangle. Likewise, after some training, the patient can spell out entire words letter by letter, thinking of them in sequence and in line with the pre-defined collection of categorized brain signals.
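The glossary idea can be sketched in a few lines of Python. This is a hypothetical illustration, not the team's implementation: it assumes the classifier has already turned each brain signal into a label such as `"triangle"` or `"w"`. Only the triangle-to-‘thirsty’ pairing comes from the example above; the other entries and the `decode` function are invented.

```python
# Personalized glossary: classifier label -> message.
# Only "triangle" -> "thirsty" is from the article; the rest is hypothetical.
SHAPE_GLOSSARY = {
    "triangle": "thirsty",
    "circle": "hungry",
    "square": "call nurse",
}

def decode(labels, glossary=SHAPE_GLOSSARY):
    """Turn a sequence of decoded labels into a list of messages.

    Shape labels are looked up in the glossary; single letters are
    accumulated and emitted as one spelled-out word.
    """
    messages = []
    letters = []
    for label in labels:
        if label in glossary:
            if letters:  # finish any word being spelled first
                messages.append("".join(letters))
                letters = []
            messages.append(glossary[label])
        elif len(label) == 1 and label.isalpha():
            letters.append(label.upper())
        # Labels that are neither shapes nor letters are ignored.
    if letters:
        messages.append("".join(letters))
    return messages

# One imagined shape, then a word spelled letter by letter.
print(decode(["triangle", "w", "a", "t", "e", "r"]))
```

Keeping the glossary as plain per-patient data means the same decoder could serve different patients, each with their own mapping of shapes to needs.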

The study is one of the first to leverage the electrical impulses created by one’s thinking, demonstrating that AI can help turn abstract, intangible signals into real and concrete actions.

Fostering brain-AI synergies

If prototyped and developed at scale, the system could help the hundreds of thousands of people affected by locked-in syndrome worldwide, as well as open new doors for research on AI-brain synergies. Indeed, this specific use case focuses on typing interfaces, but there is no reason it could not be applied to other types of platforms.

AI algorithms that allow cognitive activity to be decoded into synthetic speech or text can help transform the way humans and machines communicate with each other. For example, they can improve the way robotic limbs are controlled through brain waves, or even accelerate the treatment of a range of neurodegenerative conditions.